Language exists for two reasons: the inevitability of experience and the need to communicate it. In other words, things happen and we need to talk about them.

From there, language has grown to engage the abstract. It has outgrown its embryonic function and expanded, but it has always been rooted in the real world.

Until now.

Large Language Models (LLMs) learn language from language alone, not from any experience in the world. As a result, they produce language as an abstraction of the world, not as a mechanism for connecting to it. This is where the complications begin.

The Abstract and The Concrete

When I am cooking with my children, they learn new words. Spatula, colander, wok, etc.

They learn them abstractly: “The spatula is a handheld tool for cooking, used to flip or scrape in a pan. It goes in the drawer with spoons.”

But they also learn them concretely: “This is a spatula.”

Thus, the language learning experience is both conceptual and experiential; abstract and concrete. This is how language works for humans. It is the tool that both describes our experience and connects us to it. Everything we understand through language connects back to our own concrete experience.

So what happens when language exists in the absence of experience? Well, I see three steps:

Syntactic Replication

Semantic Approximation

Synthetic Communication

Syntactic Replication is the copying of structures, including how words relate to each other. This is the basis for all of the language produced by an LLM.

Semantic Approximation is the appearance of “understanding” that LLMs can produce as a result of massive volumes of Syntactic Replication.

And, with the ability to replicate syntax and mimic an “understanding” of language, LLMs produce Synthetic Communication.

This synthetic communication can be extremely useful as a tool, but it also shakes our understanding of language to the core. It forces us to question what the difference between this “synthetic communication” and true human communication really is: what makes us different from the machines?

After my conversation with Lisa Meeden on Merging Minds, I have arrived at some kind of answer. It is an idea rather than a conclusion, and it is bound to change over time.

The difference is in the root of language that I introduced earlier: the connection to concrete experience.

Experience

True communication comes when language reveals a commonly understood experience using commonly understood structures. Good communication ties us back to the truth of our experience, and allows us to share it with someone else. This person then ties our words and experience back to their own.

The machine has no experience, and thus cannot communicate.

It is worth mentioning that true communication is not a given when humans speak either, but merely a possibility. We often "talk past one another", using language that follows understood structures but does not adequately connect us to an experience. When this happens, we talk but we don't communicate. This is what Large Language Models do as well.

As humans, we can aspire towards true communication, but large language models cannot. Professor Lisa Meeden helped me conceptualize why this is, both technically and philosophically.

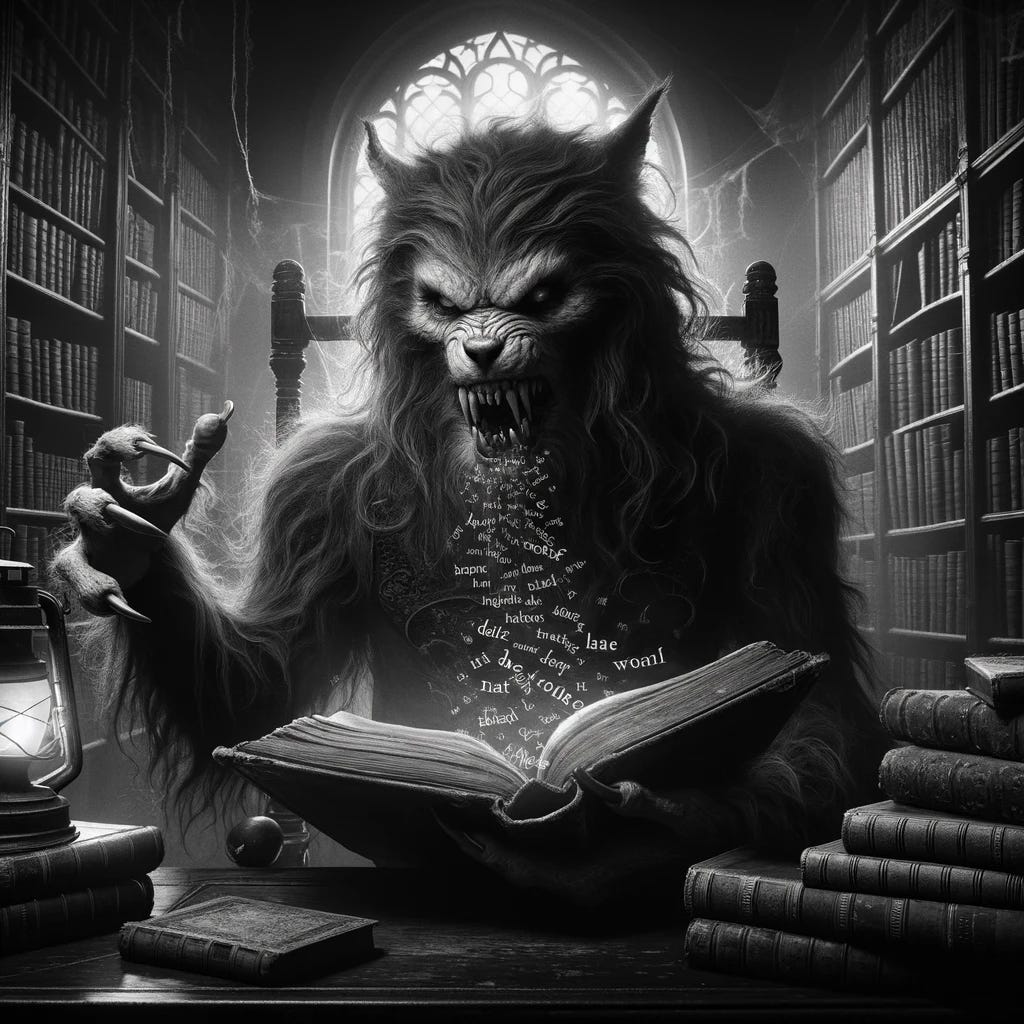

Closet Child

Professor Meeden told me to imagine an LLM as a child locked in a closet from birth, being fed unfathomable amounts of text. The child may learn to replicate and even manipulate the structures they hear, but they will have no concept of how this text is rooted in real experiences.

It is a horrible image, but it does help us internalize where the limits of LLMs lie. The child in the closet wouldn’t produce anything innovative, empathic, or ahead of its time. It simply wouldn't be able to. Large Language Models can't do that either.

In a way, this child (the LLM) is the inverse of the average human. Humans are rich with experiences, but most of us lack the mastery of the common structures (language) to communicate those experiences effectively. The best communicators, gifted writers or orators, are those who can connect the commonly understood structures of language most profoundly to a commonly understood experience. Even if an LLM has the technical capabilities of a top-notch writer, it doesn’t have any experience to reflect in its writing.

So, it will never replace the gifted writer or the elite translator. It won't replace anyone whose experience is crucial to their craft.

In short, a human fails to communicate when words aren’t faithful to their experience; AI fails to communicate because “experience” is absent from its words.

But this doesn't mean that the AI's mastery of structures isn't useful. It can be extremely helpful when paired with the writer or translator's experience.

If an AI’s words don’t reflect experience, what do they reflect?

Thinking about this question is where we can compare my children with the unfortunate child locked in a closet. My children learn what a spatula is by hearing about it and reading about it, but also by holding it, seeing it, and using it. They know what it is both in theory and in practice. The closet child has only read about the spatula, but they have read a lot about it.

You can imagine that if you asked my children and the closet child what a spatula is, their responses would be similar:

“A tool used in the kitchen... etc.”

They would both appear to know what a spatula is, even though only my children would REALLY know. Basically, the closet child’s ability to talk about a spatula would masquerade as an understanding of spatulas.

The same would be true if you asked the child about biology, engineering, love, sadness, or anything. The child would have heard enough about these concepts to discuss them in depth, but they would have no true knowledge. This is exactly the case with LLMs: they can talk about everything without understanding anything.

This level of mimicked understanding is possible because of the sheer amount of language data that these models consume and then replicate. They ingest so much data that their statistical analysis of how words go together looks a lot like semantic understanding. In many instances, substituting statistical robustness for semantic understanding is sufficient to generate quality information. This is why LLMs can be so useful: in many ways they receive inputs and produce outputs like a conscious being, even though they are not.
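To make “statistical analysis of how words go together” a little more concrete, here is a toy sketch in Python. It is not how a real LLM works (those are neural networks trained on vastly more text); it is just a word-pair counter, and the tiny corpus and the function names are invented for illustration. Still, it shows the basic idea: the program has never seen a spatula, yet it can finish a sentence about one.

```python
from collections import Counter, defaultdict

# Toy corpus, made up for this example. A real model sees vastly more text.
corpus = (
    "the spatula is a tool for cooking . "
    "the spatula goes in the drawer . "
    "the spatula is a tool for flipping . "
    "the wok is a pan for cooking ."
).split()

# Count how often each word is followed by each other word.
follow_counts = defaultdict(Counter)
for current_word, next_word in zip(corpus, corpus[1:]):
    follow_counts[current_word][next_word] += 1


def most_likely_next(word):
    """Return the word that most often followed `word` in the corpus.

    Raises IndexError for a word with no recorded follower.
    """
    return follow_counts[word].most_common(1)[0][0]


def continue_text(start, length):
    """Extend `start` by `length` words, always picking the likeliest next word."""
    words = [start]
    for _ in range(length):
        words.append(most_likely_next(words[-1]))
    return " ".join(words)


print(continue_text("spatula", 5))  # prints: spatula is a tool for cooking
```

The output reads like a definition, but nothing in the program knows what a spatula is. It only knows which words tended to come next.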

This "faux-understanding" is very useful, but its reliance on such large amounts of data does come with an inherent consequence:

An insatiable hunger for data, good or bad.

The DataBeast

Large Language Models need so much data to synthesize language that they cannot be picky about the source. They will swallow up and learn from any data they can find, regardless of whether it is a world-renowned discovery or an unfounded conspiracy theory. To the LLM, it is all just language.

The barrier this presents is that AI has no clear path to more truthful or verified outputs, and it will be a dangerous tool in future misinformation campaigns. Some organizations are working on “cleaning” their models and having them “unlearn” bad inputs, but this is laborious and slow. Models will be built on bad data much faster than they are cleansed of it.

This means that we, the writers, translators, and thinkers, are the guardians of truth when working with LLMs. We can rely on the models for their computational power, but not for their judgment or fidelity.

Judgment is still strictly in the human realm, but we can speed up our application of this discernment by working in tandem with the LLM.

The Takeaway

So how do we think about text that has no root in reality and no assurance of accuracy or truth?

Well, we don’t ask it for anything it can’t give.

We should use LLMs to work faster, stay organized, generate ideas, and double-check for errors. But when it comes down to truth and experience, humans are the guardians.

We are the ones who can tie language back to the world we live in, the ones who can speak to real experiences.

We are the ones who have held spatulas in our hands.

This was a reflection inspired by the conversation I had with Professor Lisa Meeden on the Merging Minds podcast. If you would like to listen to the conversation, search for “Merging Minds” wherever you get your podcasts.

