Machine Translation Poisoned our Understanding of AI.

Gen AI has incredible potential, but MT has made it difficult for our community to adopt it with an open mind. How do we push through this?

Note: This is an article I wrote and posted nearly a year ago. Based on some recent conversations, I revisited it and found it even more relevant than before. I gave it some light touch-ups, but the content is the same as when I wrote it. I hope you enjoy it. Back to new content next week!

Intro

Our past experience with Machine Translation (MT) directly impacts how we perceive Generative AI (Gen AI). Instead of seeing Gen AI applied to translations as an entirely distinct paradigm, we tend to follow typical human cognition patterns and conflate the unknown with the closest familiar idea.[1]

Most of us (non-experts) don’t understand how MT works at a granular level. We can’t deeply conceptualize the differences between rule-based vs. statistical, tuning vs. training, and neural vs. adaptive. Maybe we broadly understand the concepts, but most users are not computational linguistics experts. We get the gist of it: Text goes in in one language and comes out in another. Neural sounds better than rule-based, and so on.

Source: https://www.freecodecamp.org/news/a-history-of-machine-translation-from-the-cold-war-to-deep-learning-f1d335ce8b5/

When something new like Generative AI is released into the mainstream, we hang on to whatever we can to make sense of it. So, it’s only natural that we make sense of Gen AI in light of its closest relative: Our experience with MT. Real education requires time that most of us don’t have, so we skim through articles, headlines, presentations, and social media posts. As a result, we make quick assessments with information that is shallow and preconditioned at best.

The initial result is tragic:

Translators worried about their livelihoods

Companies thinking about how to cut corners in ways that can be potentially harmful to their brand and greater community

Manifestos against the use of Generative AI in translations

Unfounded privacy concerns

Disinformation and misinformation flying all over the place

The Miracle of MT

Let’s first clear the air. MT is a wonderful, almost magical thing in and of itself. Text in one language in, text in another language out. That is amazing. Like any tech, all its potential (both constructive and destructive) is dictated by how we adopt it as a society.

Over the past twenty years, our use of MT has been largely:

Shallow (Disconnected from technical expertise, strategy, and decision-making)

Disconnected (Research and Development have been deeply removed from actual adoption and User Experience)

Greedy (Focus has been excessively on margins and productivity with little or no attention to the human experience and broader social impacts)

Given how amazing MT is as an invention, I would have expected it to have a more positive legacy up until now.

How MT was Adopted and Understood

Shallow Understanding

MT is great in certain circumstances and potentially perilous in others. If you need to quickly make sense of something someone is saying and can take in potential awkwardness with a grain of salt, it can be life-saving. But if you need to make a critical decision based on detailed information, it could mean death. In reality, most translations fall somewhere in between. They’re neither inconsequential nor a matter of life or death. To deal with this gray zone you need technical acumen and expertise to evaluate a hypothetical MT sophistication gradient:

Raw MT

Tuned MT

Tuned and trained MT

Tuned and trained MT with some human review

Tuned and trained MT with a lot of human review

Tuned and trained MT with full human review

This gradient can have different combinations, definitions, and permutations, including language combinations and subject matters. It’s just there to illustrate that you can work with MT in a myriad of different ways. And, if you know your content and your processes, you can run tests and make smart decisions about which workflow to use for which content. But in the real world, most decisions are shallow, governed purely by financial constraints or made by people without the necessary technical expertise.
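As a rough illustration of matching workflow to content, the gradient above can be written down as an explicit policy. This is only a sketch under stated assumptions: the `Workflow` names mirror the six tiers listed above, while the 1-to-3 `risk` score, the `public_facing` flag, and the mapping itself are hypothetical choices made for the example, not an established industry rule.

```python
from enum import IntEnum


class Workflow(IntEnum):
    """The six tiers of the hypothetical MT sophistication gradient."""
    RAW_MT = 1
    TUNED_MT = 2
    TUNED_TRAINED_MT = 3
    SOME_HUMAN_REVIEW = 4
    HEAVY_HUMAN_REVIEW = 5
    FULL_HUMAN_REVIEW = 6


def choose_workflow(risk: int, public_facing: bool) -> Workflow:
    """Pick a tier from a content risk score and its audience.

    risk: 1 (gist only), 2 (business content), 3 (critical decisions).
    public_facing: True if the text is published outside the organization.
    Both inputs and the formula are illustrative assumptions.
    """
    if risk not in (1, 2, 3):
        raise ValueError("risk must be 1, 2, or 3")
    # Each risk level spans two tiers; public-facing content gets the higher one.
    tier = 2 * risk - (0 if public_facing else 1)
    return Workflow(tier)


if __name__ == "__main__":
    print(choose_workflow(1, public_facing=False).name)
    print(choose_workflow(3, public_facing=True).name)
```

The point is not the specific formula but that the decision is written down, testable, and open to debate, rather than defaulting to whatever is cheapest.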

While one would think this shallowness would improve over time, it hasn’t.

It’s safe to say that we, as an industry, have been ineffective at educating the broader audience. Instead of conveying that MT can be magnificent if adopted correctly, the message has largely been that MT is unreliable. Had we been better at communicating these concepts with a little more depth, chances are that the broader community would have been able to adopt MT with greater efficacy.

Disconnection from User Experience

The primary users of MT are translators (receiving pre-translated files) and end readers. But most of the computational linguists I have talked to over the years work on MT models with an engineering-first approach. The focus has been predominantly on BLEU scores and other metrics rather than on the translator or reader experience.

This disconnection problem persists throughout the entire production chain. Companies outsource to large agencies, which then outsource to translators or other smaller agencies. This means that the bulk of the people actually using MT are typically a few layers removed from decision-makers.

Non-dialogical Adoption

Translators, for the most part, have been given MT as a suggested translation and then have to work on it in order to reclaim authorship over their text. This creates instant tension between the MT and the translator. The entire premise of MT is non-dialogical: the user is offered a translation and that is it. Outside of specific training scenarios, MT wasn’t adopted as a continuous conversation with an engine.

Excessive Focus on the Bottom Line

As is the natural case for any technocapitalist innovation, the bottom line is some form of profit. It’s up to us as a community to widen the lenses through which we understand and adopt the innovation to turn it into something positive, constructive, and meaningful.

For the most part, MT focuses only on the bottom line, not on process, not on broader social impact. There are two key recurring caricatures that I have seen again and again:

Case 1: The Agency Caricature

Pre-translate it with MT and send it out for translation, paying a fraction of the full translation rate. Instead of being framed as a translation ally, MT becomes an enemy of translation quality. With variable results, translators are left for the most part to work in haste in order to make up for the pay cut. The tools for effective post-editing are for the most part unavailable, and translators now have to make sense of MT as a feed that needs to be reconciled with Glossaries and Translation Memories. While one can argue that there are significant efficiencies from using MT as an initial translation draft, you need a fair methodology in place that keeps in mind post-editing coefficients and somewhat accurately assesses effort. For the most part, this wasn’t done. For much of the translation community, MT was reduced to the same work for less pay.
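One way to make "post-editing coefficient" concrete is to measure how much of the MT draft the translator actually had to change, and to scale pay by that instead of applying a flat discount. The sketch below uses word-level edit distance normalized by the length of the final text. The function names, the 0.3 floor, and the linear mapping from effort to rate are all illustrative assumptions, not an industry standard, and real effort also includes reading and research time that no string metric captures.

```python
def word_edit_distance(a: list[str], b: list[str]) -> int:
    """Levenshtein distance computed over word tokens instead of characters."""
    prev = list(range(len(b) + 1))
    for i, word_a in enumerate(a, start=1):
        curr = [i]
        for j, word_b in enumerate(b, start=1):
            cost = 0 if word_a == word_b else 1
            curr.append(min(prev[j] + 1,          # delete a word
                            curr[j - 1] + 1,      # insert a word
                            prev[j - 1] + cost))  # keep or substitute
        prev = curr
    return prev[-1]


def post_edit_effort(mt_draft: str, final_text: str) -> float:
    """Share of the final text that had to be changed, clamped to [0, 1]."""
    mt_words, final_words = mt_draft.split(), final_text.split()
    if not final_words:
        return 0.0 if not mt_words else 1.0
    return min(1.0, word_edit_distance(mt_words, final_words) / len(final_words))


def pay_coefficient(effort: float, floor: float = 0.3) -> float:
    """Map effort to a fraction of the full rate (hypothetical linear scheme).

    The floor pays for reading and validating even a perfect draft.
    """
    return floor + (1.0 - floor) * effort


if __name__ == "__main__":
    effort = post_edit_effort("the cat sat on mat", "the cat sat on the mat")
    print(round(effort, 3), round(pay_coefficient(effort), 3))
```

Under a scheme like this, a draft that needs rewriting from scratch earns the full rate, which is the fairness property the flat "fraction of the rate" model lacks.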

Case 2: The End-User Caricature

I’ll just translate this with MT and everything will be fine. Quick, easy, and nearly costless. That is, until feedback comes pouring in like an avalanche over something that was incomprehensible, offensive, meaningless, compromising, or marred by some other destructive linguistic inaccuracy. I have seen this happen again and again with videos, contracts, websites, and other content across varying industries and subject matter.

Gen AI

So far, I have argued that MT, which is a good thing in and of itself, was sub-optimally understood and adopted. I believe we are making the same mistake again with Gen AI.

Most people I talk to understand an LLM as an alternative to MT. By framing it in that way we open the door to carry on with the suboptimal implementation legacy we have inherited from MT.

But the way I see it, Gen AI is an entirely different paradigm from MT. For starters, most mainstream LLMs were not conceived, trained, or tested as translation engines. Rather, they were trained as predictive devices. It just so happens that they can translate but that’s not what they were meant for by design.

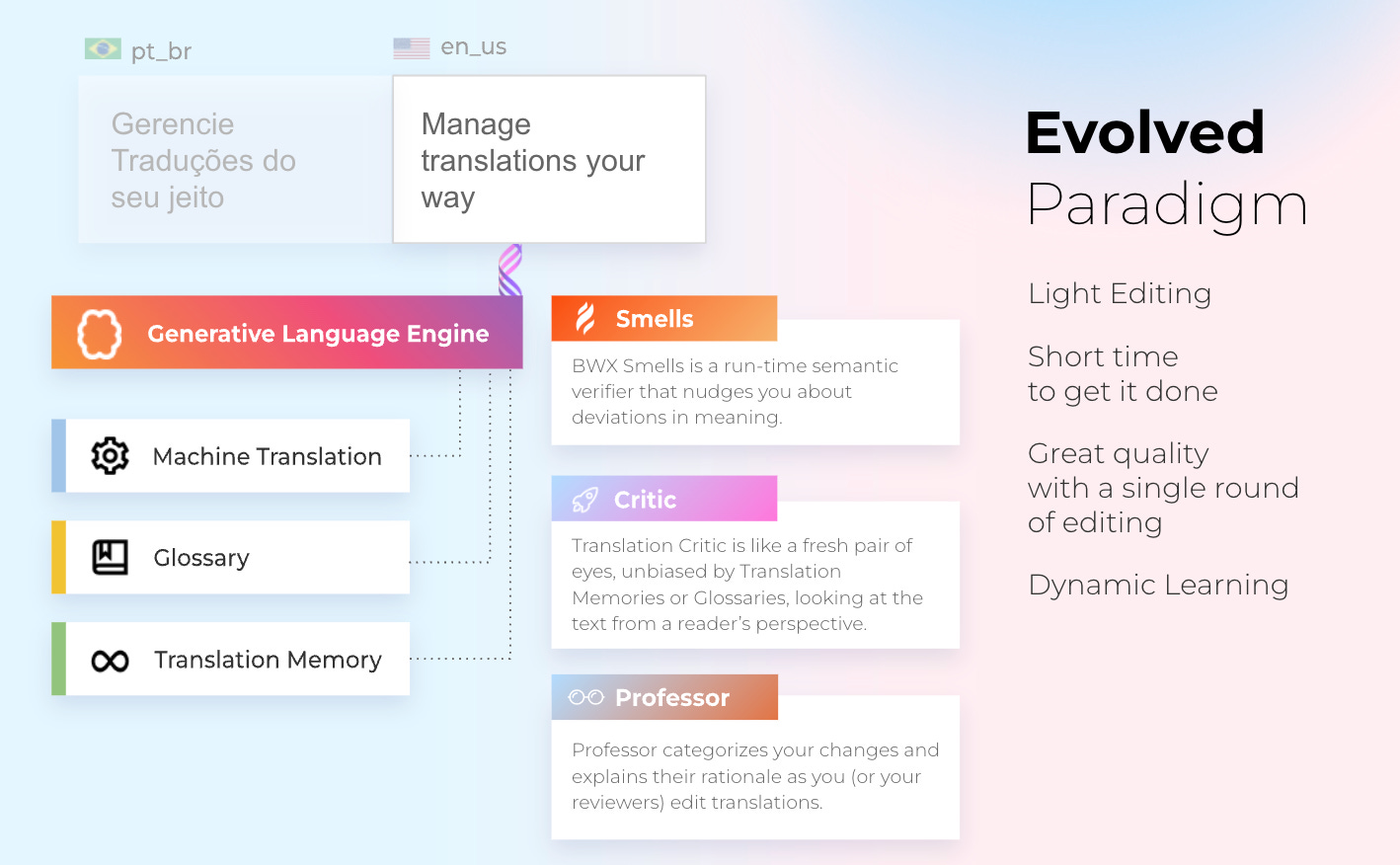

An LLM is able to run a myriad of analyses and inferences that were previously only ascribable to a human being: detecting deviations in meaning, assessing quality, providing alternatives based on a given set of instructions, and abiding by a style guide. The list goes on, but an LLM and the entire Machine Learning paradigm fundamentally open the door for a different kind of relationship between human and machine: one of dialogue.

Whereas with MT a translator was left with a cold feed to fix, LLMs open the door for translators to dynamically interact with refined contextual decisions that keep on learning. The translator is not just a fixer of a feed. They are now working in a continuous linguistic flow with an engine that’s also learning and adjusting according to their choices. The video below exemplifies this in Spanish.

In this light, Gen AI is clearly not a human replacer but a human enhancer. As Bridget Hylak, Administrator of ATA’s Language Technology Division, described in our last BWX Academy Session,

Gen AI in translation is currently closer to a robot that assists a surgeon granting them unprecedented levels of precision and control than it is to anything that will render translators less important or irrelevant.

Gen AI can clearly take care of linguistic grunt work and heavy lifting which opens room for more creativity and intellectual sophistication.

It can also check for semantic errors at runtime, allowing translators to go out on more limbs to convey meaning, knowing that they have an additional set of eyes that will help them spot distortions, omissions, and other destructive losses of meaning in the translation process.

The translator also becomes a language training supervisor or what we call a Language Flow Architect. This innovation leverages the value of the translation work since good translation work can significantly decrease the required effort from other translators working on similar content for a given company or agency moving forward.

But I see so many people talking about AI as something that is going to replace humans and take away jobs. Not a creative optimizer, but a destroyer of human value.

Where do we go from here?

Shallowness is expected

Shallowness prevails: most people will understand Gen AI as a GPT interface, MT on steroids, not an entirely different paradigm. That’s not a problem in and of itself, simply a challenge that must be overcome. Taking all information with a grain or two of salt, and taking the time to go after primary sources and experiences, pays off big time in the long run.

Memes, Hype, and Epic Fails

In the example below, I decided to use Google’s Duet instant subtitling feature. Our Head of Marketing was speaking in Brazilian Portuguese at our All Hands meeting and Google’s AI translator completely botched it. He was talking about how happy he was to be with everyone, and Google subtitled it for me, adding a Chinese character and a “full mouth a penis on batch papu”. I didn’t know where to hide…

Examples like this will be commonplace. They will be capitalized on, and preyed on. Instead of trying to understand what’s behind this debacle and how to make it better, things quickly become memes. And once a meme, it’s etched for as long as eternities last in the internet's collective consciousness.

In this case, for instance, a deeper analysis reveals that the translation fiasco was partly due to the fact that I incorrectly set the speaker’s language as English. The tech should have done better, but so should the user; in this case, me.

But the opposite is also true: 7.6 million people (and counting) have seen a person being automatically translated with seamless lip-synching. Many of them believe the tech is there.

The truth, though, is that this was not instant (as the correction shows). It was processed asynchronously. While the tech remains cool, it’s way oversold, and the speaker is using simple words with clearly enunciated diction. Viewers who are not translators are inclined to believe translation has already become obsolete. As techies love to say, “It’s a solved problem.” Only it’s not.

A Strong Technically Savvy Community is Key

Little dialogue, shallow understanding, and a fragmented sense of community all contributed to a less-than-optimal outcome with MT. If we don’t change, we will make different but analogous mistakes all over again.

The Golden Opportunity

Dialogue, mutual understanding, and building together aren’t just collective utopian ideals. They are real possibilities of the boundless virtual networks that currently tie us all together. If translators don’t stand idly by as downstream service vendors, but instead take a proactive, early-adopter stance on the tech, they will have a significant head start. Five years from now, I can see an outcome where people are translating their content left and right with Gen AI, where translators are paid even more laughable rates, and the profession has become an archaic relic.

But I can also envision an outcome where there is a lot more content produced, translation standards are significantly higher, and translators are more recognized for their intellectual and cultural prowess. It really is up to us as a community to determine this outcome. And the only way to have a say in this is to experiment with the tech, incorporate it into your craft, and build a strong community together through dialogue and mutual understanding.

[1] George Lakoff, Women, Fire, and Dangerous Things (Chicago: University of Chicago Press, 1987).

Great, balanced article and take on this matter

We have rule-based, statistical, neural, adaptive, phrase based blah bla bla.. Machine Translations in market. AI the Artificial intelligence may be Machine Translations or AI translation base is tuning and training

Today also, main aspect of AI Translation or MT is to translate no subject matters. This making a very strong impact on Subject Domains. Content in specific domain results are well below standard

In other words, it is not that “Machine Translation Poisoned our Understanding of AI,” but that MT and AI have a lot of scope for development in specific domains